You have arrived!

Has this happened to you? Your GPS announced the above, and your chosen destination was less than 10 metres away, but on the other side of a fence.

It is rare, but I recall at least three instances where over-reliance on the GPS led me to dead-ends or closed roads. Each time I had seen it coming, but rather than use my judgement, stop and seek help, I kept trusting the GPS. This over-reliance is called "Automation Bias".

An excerpt from a BBC article elaborates on what Automation Bias is:

We aren't just bad at weighing up the trustworthiness of a machine, we're also easily seduced by mechanical objects when they start behaving a bit like a social partner who has our best interests at heart. So, in contrast to the person who has been stung by unresponsive computers and therefore distrusts them all, the individual who has learned to put their faith in certain systems – such as aircraft autopilots – may struggle to understand how they could be wrong, even when they are.

Why does this "Automation Bias" creep in?

Overconfidence in technology

Consider a hybrid workflow for income validation under the "P&L program" of a non-QM mortgage loan. As a first step, a machine extracts information from multiple P&L and bank statements. It does this so well that we start believing it is infallible: even if it is only 98% accurate, we treat it as 100% accurate.

The complexity of solutions

The income validation solution is made up of multiple components. As a second step, the extracted information is consumed by a downstream GenAI application that is 95% accurate. Assuming the two components make errors independently, the overall accuracy drops to 0.98 × 0.95 = 0.931, or 93.1%. Unless end users are explicitly told how the interaction between components affects overall accuracy, they continue to believe the solution is 98% accurate.
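The arithmetic above generalises to any chain of components. The following is a minimal sketch, assuming each component's errors are independent and that an upstream error is never corrected further downstream; the function name `pipeline_accuracy` is hypothetical and not part of any system described here.

```python
from math import prod


def pipeline_accuracy(component_accuracies: list[float]) -> float:
    """Estimate the end-to-end accuracy of a chain of components.

    Assumption: component errors are independent, and any error made by an
    upstream component propagates to the final output.
    """
    for a in component_accuracies:
        if not 0.0 <= a <= 1.0:
            raise ValueError(f"accuracy must be in [0, 1], got {a}")
    # The whole chain is right only if every component is right.
    return prod(component_accuracies)


# Extraction (98%) followed by the GenAI step (95%), as in the example above.
overall = pipeline_accuracy([0.98, 0.95])
print(f"{overall:.1%}")  # → 93.1%
```

Every additional component multiplies in another factor below 1, so the end-to-end figure can only fall as the solution grows, which is exactly the gap the end user does not see.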

Cognitive laziness

An excerpt from a study puts it this way: "It may not be surprising to find that diffusion of responsibility and social loafing effects also emerge in human-computer interaction." We do not check a spreadsheet's output against a calculator, do we? We know its formulas are deterministic, so as long as our formulas are correct, we get the right output. Yet even when we know a system's output is probabilistic rather than deterministic, social loafing and diffusion of responsibility still kick in. It is less demanding to accept the machine's output than to verify it.

Combating the Automation Bias

Hybrid workflows let us get the best value out of AI. GenAI, with its human-like output, is an important component of these workflows. A side effect of that human-like output is that it encourages automation bias. So how do we counterbalance it?

Encouraging human-AI collaboration, not one-upmanship

In the software world, we use pair programming when implementing the most complex and critical systems. Pair programming leaves little room for cognitive laziness: it encourages constant communication, discussion, and critical thinking about the code being written.

For the "Income Validator" component of a demo workflow we are building, we have taken inspiration from pair programming. We have implemented UI patterns to encourage the right amount of discussion and debate between humans and AI.

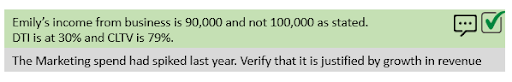

Consider one such UI pattern. The image shows the output of the AI system during "Income Validation". The system has analysed the P&L and bank statements and now presents a summary to the loan officer.

The colour code indicates how confident the AI system is in its output: the green box holds output with 100% confidence, while the grey box holds output with lower confidence.
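One way to picture the green/grey split is as a simple routing rule over scored fields. This is a minimal sketch, not the implementation behind the screenshot: it assumes each extracted field carries a confidence score in [0, 1], and the names `ExtractedField`, `colour_for`, `split_summary` and `green_threshold`, as well as the sample values, are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Box(Enum):
    GREEN = "green"  # high-confidence output
    GREY = "grey"    # lower-confidence output that needs closer review


@dataclass(frozen=True)
class ExtractedField:
    # Hypothetical shape of one item in the income-validation summary.
    name: str
    value: str
    confidence: float  # assumed to be a score in [0, 1]


def colour_for(f: ExtractedField, green_threshold: float = 1.0) -> Box:
    """Route a field to the green or grey box.

    The default threshold of 1.0 mirrors the "100% confidence" rule for
    green; how a real system earns that score is an open design question.
    """
    return Box.GREEN if f.confidence >= green_threshold else Box.GREY


def split_summary(fields, green_threshold=1.0):
    """Group fields into green and grey lists for display."""
    green = [f for f in fields if colour_for(f, green_threshold) is Box.GREEN]
    grey = [f for f in fields if colour_for(f, green_threshold) is Box.GREY]
    return green, grey


# Made-up fields, for illustration only.
fields = [
    ExtractedField("monthly_deposits", "12,400", 1.0),
    ExtractedField("net_income", "8,150", 0.82),
]
green, grey = split_summary(fields)
print([f.name for f in green], [f.name for f in grey])
# → ['monthly_deposits'] ['net_income']
```

Keeping the threshold as an explicit parameter makes the green/grey decision a visible design choice rather than something buried in the UI.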

The loan officer, who consumes the AI output, has edit and approve buttons for this interaction. This pattern encourages accountability between the two partners by ensuring that:

- The system has to be designed around confidence levels. These questions have to be considered from the very beginning: How do we choose what goes in green versus grey? How do we ensure that what is displayed in green really merits 100% confidence? What happens if everything is grey? Is the system still useful?

- The loan officer has to take ownership of the outcome. Unless they approve, the workflow does not move forward. The colour-coded output and the buttons encourage them to think and contribute.
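The approval gate described above can be modelled as a small state machine in which the workflow refuses to advance until a named person approves. This is a minimal sketch under stated assumptions: `IncomeReview`, `Stage`, `advance_workflow` and the sample values are hypothetical names chosen for illustration, not the actual "Income Validator" code.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import Optional


class Stage(Enum):
    AWAITING_REVIEW = auto()
    APPROVED = auto()


@dataclass
class IncomeReview:
    """Hypothetical review step: AI output waits for a loan officer's decision."""
    ai_summary: dict[str, str]
    stage: Stage = Stage.AWAITING_REVIEW
    edits: list[tuple[str, str, str]] = field(default_factory=list)  # (field, old, new)
    approved_by: Optional[str] = None

    def edit(self, name: str, new_value: str) -> None:
        # The officer can correct any field; each change is recorded.
        if self.stage is not Stage.AWAITING_REVIEW:
            raise RuntimeError("review is already closed")
        old = self.ai_summary.get(name, "")
        self.ai_summary[name] = new_value
        self.edits.append((name, old, new_value))

    def approve(self, officer: str) -> None:
        # Approval is an explicit action attributed to a named person.
        if self.stage is not Stage.AWAITING_REVIEW:
            raise RuntimeError("review is already closed")
        self.approved_by = officer
        self.stage = Stage.APPROVED


def advance_workflow(review: IncomeReview) -> str:
    """Gate: the next step runs only after the officer has approved."""
    if review.stage is not Stage.APPROVED:
        return "blocked: awaiting loan officer approval"
    return f"proceeding (approved by {review.approved_by})"


review = IncomeReview(ai_summary={"net_income": "8,150"})  # made-up value
print(advance_workflow(review))  # → blocked: awaiting loan officer approval
review.edit("net_income", "7,900")
review.approve("officer_42")
print(advance_workflow(review))  # → proceeding (approved by officer_42)
```

Recording who approved and what was edited makes the human's contribution visible, which supports the ownership the pattern aims for.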

Is this UI pattern enough to combat the automation bias?

Stay tuned as we share our experiences in upcoming posts.
